Crawler

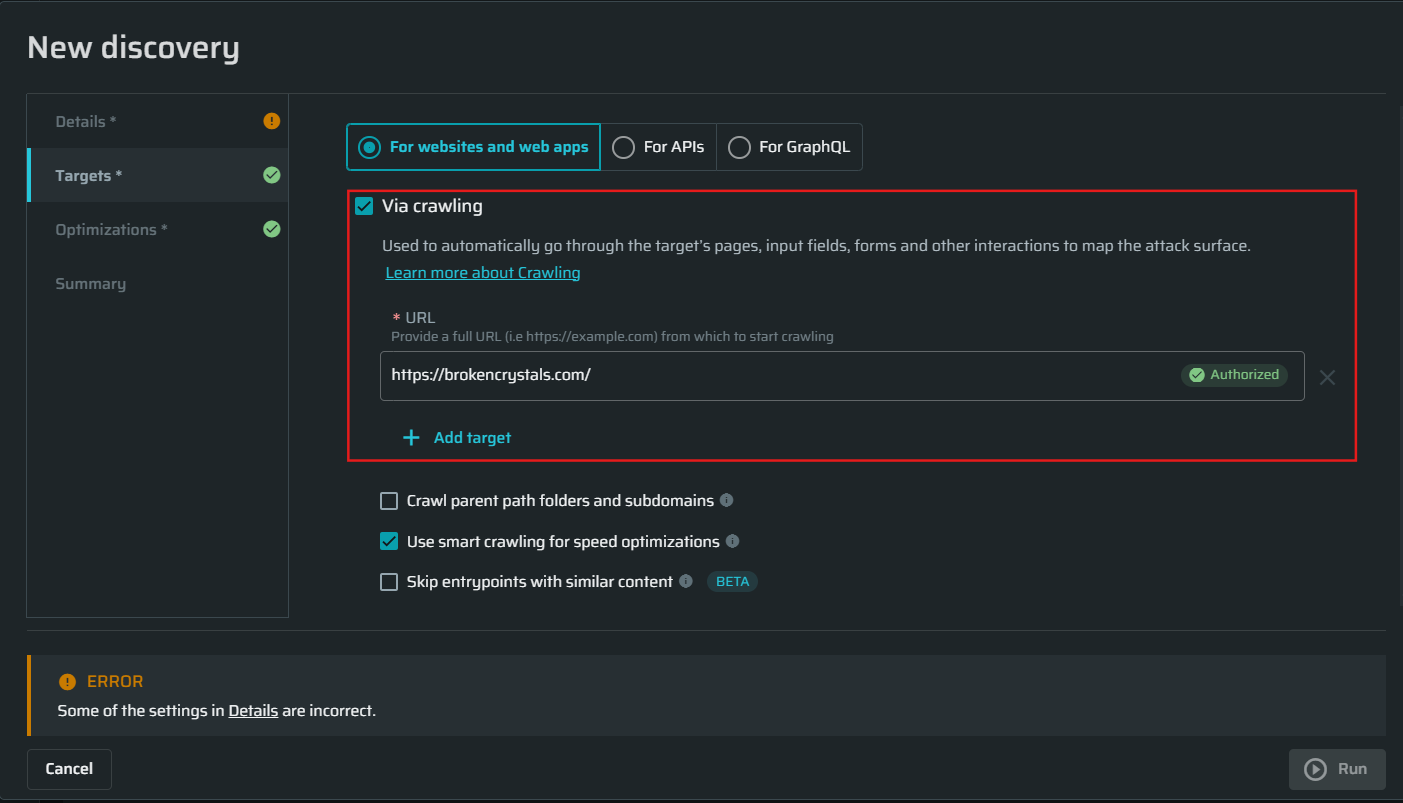

Bright can crawl your web application to define the attack surface. This option does not require any details that might get you tangled. To run a security scan using a crawler, you simply need to specify the target URL in the URL field.

The crawler automatically detects and parses the OpenAPI Specification and GraphQL schemas it encounters. Automatically expands the scan's scope by adding all endpoints defined within the schema, ensuring even "hidden" APIs are tested.

To discover only specific parts of your application, use a relative path like https://foo.bar/example. Entrypoints located under a different domain/path will be skipped.

You can also add multiple URLs by clicking on Add target.

To ensure complete coverage of the discovery, you should configure an authentication object so that the crawler can reach the authenticated parts of the target application. See Authentication for detailed information.

| Pros | Cons |

|---|---|

| Simple usage. You simply need to specify the target host. The crawler will define the attack surface (discovery scope) automatically. | Less coverage. The crawler cannot get through some user-specific forms or provide the required input. It means that the attack surface may be defined incompletely, and Bright will not cover such parts of the application during scanning. |

| Full automation. By default, the crawler automatically covers all the application parts that it can reach. | Limited coverage level. The crawler is applicable only to web pages of the target application and the microservices connected to the application directly. Crawling between the microservices is disabled. |

You can combine full automation with complete coverage by applying both the Crawler and Recorded (.HAR) discovery types for a scan.

Setting coverage exclusions

For setting coverage exclusions when discovering with a crawler, see Entrypoints discovery options.

Updated 7 months ago